How to Use Cosine Similarity for Vector Search in pgvector

Lately, to deepen my understanding of Retrieval-Augmented Generation (short: RAG), I have been developing a local RAG setup from scratch with a focus on open-source technology. Doing this from scratch and coding as many components myself has allowed me to explore how different components of such a system interact, including vector storage and retrieval.

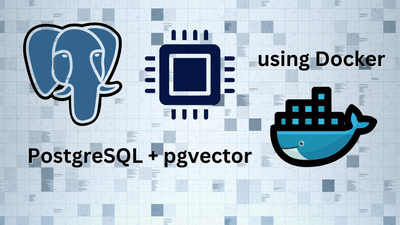

Today, I want to discuss how to effectively search stored vector embeddings using PostgreSQL and its extension, pgvector.

Prerequisites and Used Technology

Before diving into vector search, ensure you have the following setup:

- PostgreSQL with the pgvector extension installed.

- Access to an embedding model for generating vector embeddings.

- Python with

psycopg2for executing SQL queries programmatically or any other way to access the database for your app purposes

I have written a detailed guide on setting up pgvector with Docker that you can follow to get started.

For this tutorial, I use Obsidian markdown notes as my dataset. These notes are embedded currently using OpenAI's text-embedding-3-large model and stored as vectors in a PostgreSQL database with pgvector running locally via Docker. However, this approach can be applied to any text-based dataset with any text embedding model.

Understanding Cosine Similarity for Vector Search

When performing a search query, we need to identify which stored notes are most relevant to the user-input. The key idea is to embed the search query using the same text embedding model and then compare it with the stored embeddings using a similarity metric.

Why Use Cosine Similarity?

The short answer for this specific post is: because it's the default. The slightly longer answer is that this is the metric often used for training vector embeddings.

If you are using text-embedding-3-large by OpenAI or a similar model, you should just listen to their advice: "We recommend cosine similarity. The choice of distance function typically doesn't matter much." (see https://platform.openai.com/docs/guides/embeddings)

If you are using another model for text embedding, you can see if they also have an FAQ on which similarity metric to use, but most of the time, you won't go wrong with cosine similarity.

Mathematically, cosine similarity between two vectors A and B is defined as:

$ \cos(\theta) = \frac{\mathbf{A} \cdot \mathbf{B}}{|\mathbf{A}| |\mathbf{B}|} $

Where:

- A ⋅ B is the dot product of the two vectors.

- ||A|| and ||B|| are the magnitudes (L2 norms) of the vectors.

- θ is the angle between the vectors.

- The result ranges from -1 (completely opposite) to 1 (identical), with 0 indicating no similarity.

<=> operator), meaning lower values indicate higher similarity. The similarity score can then be computed as: cosine_similarity = 1 - cosine_distanceImplementing Cosine Similarity in PG Vector

1. Storing Vectors in PG Vector

First, we store text embeddings as vectors in a PostgreSQL table.

CREATE TABLE IF NOT EXISTS documents (

id SERIAL PRIMARY KEY,

title TEXT,

start_of_chunk TEXT,

embedding VECTOR(1600), -- insert the embedding dimension size that your model outputs

text_chunk TEXT

);

INSERT INTO documents (title, start_of_chunk, embedding, text_chunk) VALUES (%s, %s, %s, %s); -- pass parameters or hard-code values, I guess

2. Querying for Similar Notes

Top K Results

To find the most relevant notes for a given query, we embed the query and compare it with stored vectors using cosine similarity:

SELECT title, text_chunk, 1 - (embedding <=> '[QUERY_VECTOR]') AS similarity

FROM documents

ORDER BY similarity DESC

LIMIT 5;

Here’s how it works:

<=>calculates cosine distance (lower = more similar).1 - cosine_distanceconverts it into cosine similarity (higher = more similar).ORDER BY similarity DESCsorts results from most to least similar.LIMIT 5returns the top 5 relevant notes.

Flexible number of results based on threshold

Instead of retrieving the top K results, you can filter results based on a similarity threshold. If you always retrieve 5 results, but only have 3 matching documents, you would end up retrieving irrelevant information with the above strategy that could end up confusing the final generated answer.

Here's how to query using a threshold instead of a fixed limit:

WITH similarity_calculation AS (

SELECT title,

start_of_chunk,

text_chunk,

embedding,

1 - (embedding <=> '[QUERY_VECTOR]') AS cosine_similarity

FROM documents

)

SELECT title,

start_of_chunk,

text_chunk,

embedding,

cosine_similarity

FROM similarity_calculation

WHERE cosine_similarity > 0.5;

This approach:

- Computes cosine similarity for all stored documents. (Careful if you have a looot of documents - I had ~500 and it was completely fine in terms of performance)

- Filters out documents with similarity below the chosen threshold (e.g., 0.5).

- Ensures that only sufficiently relevant results are returned.

3. Running the Query in Python

To integrate this with Python, I use psycopg2 to connect to PostgreSQL and execute the search query:

import psycopg2

import numpy as np

# Connect to PostgreSQL database

conn = psycopg2.connect(

dbname="embedding_db",

user="dev_user",

password="dev_password",

host="localhost",

port="5433",

)

cursor = conn.cursor()

# Example query vector (replace with an actual embedding)

query_vector = np.random.rand(1536).tolist()

# Execute PG Vector search

query = """

WITH similarity_calculation AS (

SELECT title,

start_of_chunk,

text_chunk,

chunk_id,

embedding,

1 - (embedding <=> %s::vector) AS cosine_similarity

FROM documents

)

SELECT title,

start_of_chunk,

text_chunk,

chunk_id,

embedding,

cosine_similarity

FROM similarity_calculation

WHERE cosine_similarity > 0.5;

"""

cursor.execute(query, (query_vector,))

# Fetch and display results

results = cursor.fetchall()

for title, content, similarity in results:

print(f"{title} (Similarity: {similarity:.2f})")

cursor.close()

%s string placeholder and convert it into a pgvector-vector using the %s::vector syntax, which type-casts the string into a vector (:: being the cast-operator in PostgreSQL).Understanding Distance vs. Similarity Thresholds

It is crucial to understand that distance and similarity are inversely related:

- Cosine distance (PG Vector default): Lower values = more similar.

- Cosine similarity (what we typically expect): Higher values = more similar.

To adjust the search results, set a threshold for similarity:

- 0.5 (broad results): Captures loosely related notes.

- 0.7–0.8 (strict results): Retrieves highly relevant content.

These thresholds are again based on common values found online. Since I want to chat with all my notes relevant to the topic, I chose a lower threshold of 0.5, but I encourage you to try out different values between 0.5 and 1 based on a few examples in your use-case to adjust how many results you will retrieve per query.

Limitations and Considerations

While this approach worked completely fine for my limited use-case of chatting with my personal notes, it's basically guaranteed that it will fail for larger use cases. Here are some alternatives you can consider within pgvector.

- Scalability: By default, pgvector implemented exact nearest neighbor search, which has been completely fine with my ~500 documents. For large-scale retrieval, consider using an index (https://github.com/pgvector/pgvector?tab=readme-ov-file#indexing), which will then use approximate nearest neighbor search.

- Alternative Metrics: Pgvector also supports L2 distance, inner product, L1 distance, Hamming distance and Jaccard distance (see documentation on the repo: https://github.com/pgvector/pgvector?tab=readme-ov-file#querying)

Of course, beyond that there are also other dedicated vector DBs to use like ChromaDB, Weaviate, FAISS or something fully managed like Pinecone.

Conclusion

Using cosine similarity in PG Vector provides an efficient way to search for relevant text-based entries. The combination of OpenAI embeddings, PostgreSQL, and SQL queries enables fast and flexible vector retrieval.

By applying this technique, you can:

- Store any text dataset as embeddings.

- Perform semantic search over your notes.

- Tune your similarity threshold for better results.

- Integrate with Python for a dynamic retrieval system.

If you’re building your own local RAG system, I’d love to hear about your experiences - drop a comment below or check out my other posts for deeper insights!

No spam, no sharing to third party. Only you and me.

Member discussion